What is Data Smoothing?

Data smoothing is a statistical technique that reduces noise in a data set, for example by removing or averaging out outliers, so that the underlying pattern is easier to see.

How Does Data Smoothing Work?

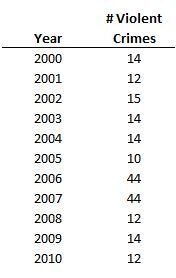

For example, let's say that a university is analyzing its crime data over the past 10 years. The number of violent crimes looks something like this:

As you can see, in most years the university records fewer than 15 violent crimes. In 2006 and 2007, however, it recorded 44 a year because of an experimental reporting requirement from the university's public safety team. During those two years, the experiment widened the definition of violent crime to include thefts of any kind, which created a big jump in the number of 'violent' crimes on campus. If we include these years in the average, the university experienced about 19 violent crimes a year. But if we leave those years out (that is, if we do some data smoothing), we can see that a more realistic average is 13 violent crimes a year, a difference of about 32%.
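
The arithmetic behind those two averages can be checked with a short sketch. It uses only the figures quoted in the text, since the per-year counts appear only in the chart. It assumes that 2006 and 2007 each had 44 crimes and that the other eight years average exactly 13; the function and variable names are illustrative only.

```python
# Figures quoted in the example; per-year counts for the other years are
# not listed in the text, so only their stated average is used here.
TOTAL_YEARS = 10
OUTLIER_YEARS = 2        # 2006 and 2007
OUTLIER_COUNT = 44       # assumed: 44 crimes in each outlier year
TYPICAL_AVERAGE = 13     # assumed: exact average of the remaining eight years


def average_including_outliers(total_years, outlier_years, outlier_count, typical_average):
    """Rebuild the overall average from the typical average and the outlier years."""
    normal_years = total_years - outlier_years
    total_crimes = normal_years * typical_average + outlier_years * outlier_count
    return total_crimes / total_years


avg_all_years = average_including_outliers(
    TOTAL_YEARS, OUTLIER_YEARS, OUTLIER_COUNT, TYPICAL_AVERAGE
)

# Relative drop when the two outlier years are left out of the average.
pct_difference = (avg_all_years - TYPICAL_AVERAGE) / avg_all_years * 100

print(f"Average with all years:      {avg_all_years:.1f}")
print(f"Average without 2006-2007:   {TYPICAL_AVERAGE}")
print(f"Difference:                  {pct_difference:.0f}%")
```

Under these assumptions the overall average comes out slightly above 19, and dropping the two unusual years lowers it by roughly a third, which matches the figures in the example.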

Why Does Data Smoothing Matter?

There are many ways to smooth data, including moving averages and more elaborate smoothing algorithms. The idea is that data smoothing makes patterns more visible and thus aids in forecasting changes in stock prices, customer trends or any other piece of business information. However, data smoothing can also overlook key information or make important facts less visible; in other words, 'rounding off the edges' of data can overemphasize some observations and ignore others.
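
As a rough illustration of the moving-average approach, here is a minimal sketch of a simple (unweighted) moving average. The `moving_average` function, the window size and the `prices` series are all hypothetical and made up for this example; they are not real market data.

```python
def moving_average(values, window):
    """Return the simple moving average of `values` over a sliding window.

    Each output point is the mean of `window` consecutive input points,
    so the result has len(values) - window + 1 entries.
    """
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    return [
        sum(values[i:i + window]) / window
        for i in range(len(values) - window + 1)
    ]


# Hypothetical series with one spike (30) standing in for an outlier.
prices = [10, 12, 11, 30, 12, 13, 11]
smoothed = moving_average(prices, window=3)

for value in smoothed:
    print(f"{value:.2f}")
```

The spike is spread across three neighboring averages instead of standing out as a single point. That is the trade-off described above: the trend is easier to read, but the fact that one unusual value occurred is less visible.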